
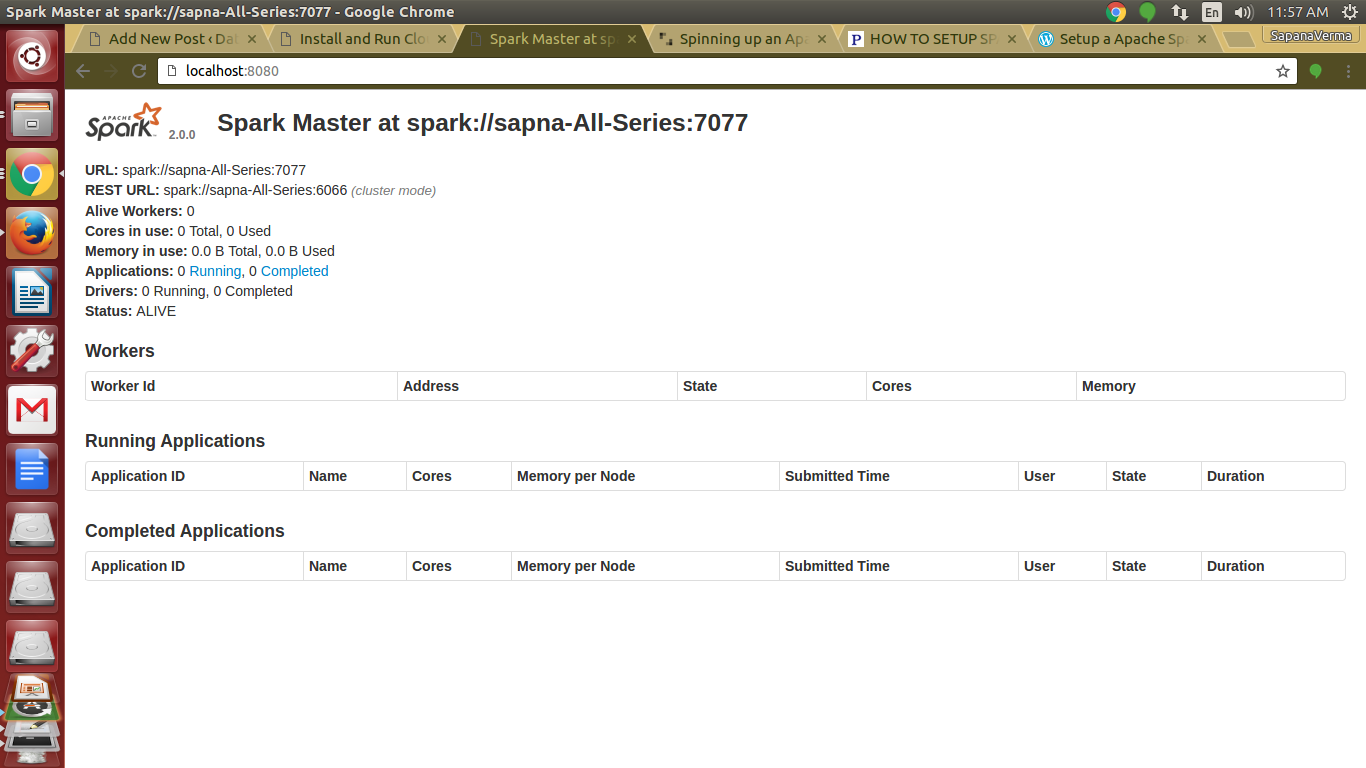

HOW TO INSTALL APACHE SPARK STANDALONE

Now that you've gotten through the heavy stuff in the last two hours, you can dive headfirst into Spark and get your hands dirty, so to speak. This hour covers the basics of how Spark is deployed, including what the different Spark deployment modes are, and how to install Spark. I will also cover how to deploy Spark on Hadoop using the Hadoop scheduler, YARN, discussed in Hour 2. By the end of this hour, you'll be up and running with an installation of Spark that you will use in subsequent hours.

There are three primary deployment modes for Spark: Spark Standalone, Spark on YARN, and Spark on Mesos.

Spark Standalone refers to the built-in or "standalone" scheduler. The term can be confusing because a single machine and a multinode, fully distributed cluster can both run in Spark Standalone mode. The term "standalone" simply means it does not need an external scheduler. With Spark Standalone, you can get up and running quickly with few dependencies or environmental considerations. Spark Standalone includes everything you need to get started.

Spark on YARN and Spark on Mesos are deployment modes that use the resource schedulers YARN and Mesos, respectively. In each case, you would need to establish a working YARN or Mesos cluster before installing and configuring Spark. In the case of Spark on YARN, this typically involves deploying Spark to an existing Hadoop cluster.

I will cover Spark Standalone and Spark on YARN installation examples in this hour because these are the most common deployment modes in use today.
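In practice, the deployment mode shows up as the master URL you hand to Spark. The sketch below maps each of the three modes to that URL. The helper names `master_url` and `yarn_config_dir` are hypothetical, written only for illustration; the URL formats follow the Spark documentation: `spark://HOST:PORT` for Standalone (port 7077 by default), `mesos://HOST:PORT` for Mesos (port 5050 by default), and plain `yarn` for YARN, where the cluster is located through the Hadoop client configuration referenced by `HADOOP_CONF_DIR` or `YARN_CONF_DIR`.

```python
import os
from typing import Optional

# Default master ports documented for the two modes that take a host:port.
DEFAULT_PORTS = {"standalone": 7077, "mesos": 5050}


def master_url(mode: str, host: Optional[str] = None,
               port: Optional[int] = None) -> str:
    """Return the Spark master URL for a deployment mode (hypothetical helper)."""
    mode = mode.lower()
    if mode == "yarn":
        # Spark on YARN: no host or port in the URL; the ResourceManager
        # address comes from the Hadoop client configuration files.
        return "yarn"
    if mode in DEFAULT_PORTS:
        if host is None:
            raise ValueError(f"{mode} mode needs the master host name")
        scheme = "spark" if mode == "standalone" else "mesos"
        chosen_port = port if port is not None else DEFAULT_PORTS[mode]
        return f"{scheme}://{host}:{chosen_port}"
    raise ValueError(f"unknown deployment mode: {mode}")


def yarn_config_dir() -> Optional[str]:
    """Return the Hadoop client config directory Spark on YARN would use, if set."""
    return os.environ.get("HADOOP_CONF_DIR") or os.environ.get("YARN_CONF_DIR")


if __name__ == "__main__":
    print(master_url("standalone", "sparkmaster"))  # spark://sparkmaster:7077
    print(master_url("mesos", "mesosmaster"))       # mesos://mesosmaster:5050
    print(master_url("yarn"))                       # yarn
```

The resulting string is what you would pass as `spark-submit --master <url>` or to `SparkSession.builder.master(...)` in PySpark. Note that only Standalone needs nothing beyond Spark itself; the YARN and Mesos URLs assume the external cluster is already running, as described above.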
